Table of Contents

- 1 Understanding Google Core Updates… What are they anyways?

- 3 Common Quality Concerns on Websites

- 4 8 Reasons Why Understanding the Google Quality Rater Guidelines (QRG) is Crucial for an Effective SEO Strategy:

- 5 Interpreting Changes in User Intent

- 6 Decoding Google’s Approach to Recognizing and Assessing Authors via E-E-A-T

- 7 Decoding Google’s E-E-A-T Metrics: A Deeper Dive into Relevant Patents and Author Evaluations/Ratings

- 8 Here’s a practical checklist of items that agencies should focus on implementing into their SEO strategy:

Understanding Google Core Updates… What are they anyways?

In today’s digital landscape, which sometimes feels a lot more like a labyrinth, understanding Google’s frequent algorithm shifts is paramount for marketers. As the digital giant continually refines its search systems, agencies must adapt to stay relevant and keep SEO results trending in the right direction across as many campaigns as possible.

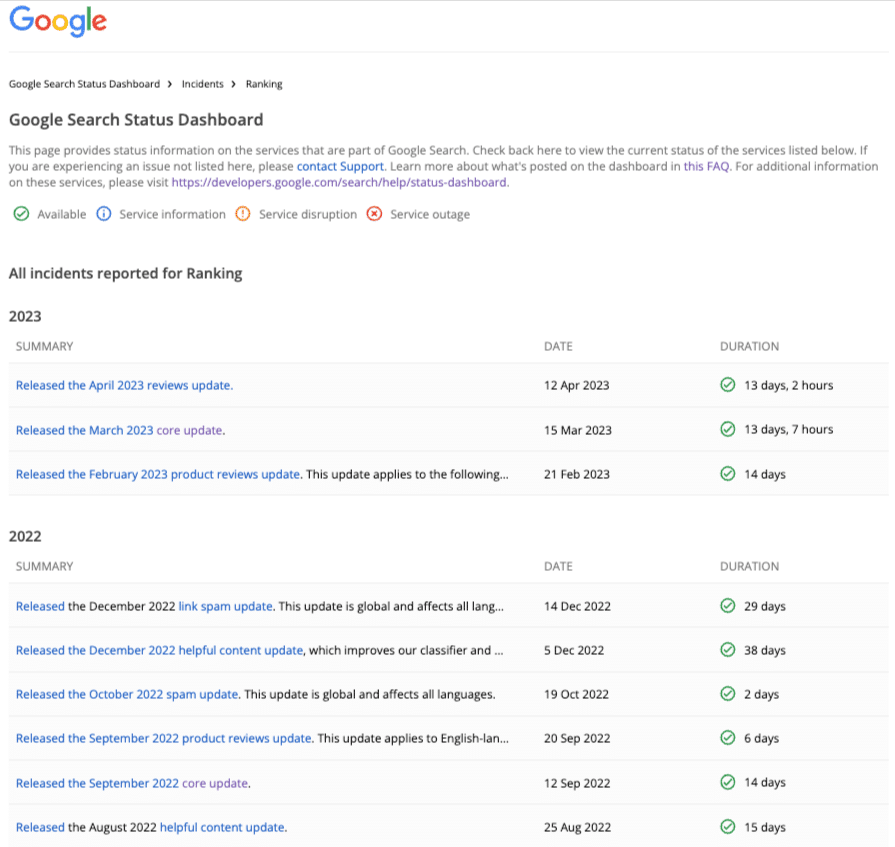

Here are the major documented core updates for the last 12 months…

Google Core Updates: A Comprehensive Overview

Google Core Updates refer to significant modifications made to Google’s primary search algorithm. These updates are designed to improve the relevance and accuracy of search results by refining the criteria used to rank websites. Unlike minor daily updates, Core Updates can profoundly impact search rankings across a wide range of websites.

The primary objective of these updates is to provide users with the most relevant and high-quality content that matches their search intent. To achieve this, Google often revisits its content quality and relevance evaluation. Factors such as the accuracy of the information, the author’s expertise, the content’s comprehensiveness, and the overall user experience on a website can all play a role in how a site is ranked post-update.

It’s essential to understand that Core Updates don’t target specific websites or niches. Instead, they aim to improve the overall search experience for users. As a result, while some websites might see a drop in their rankings, others could experience a boost. Google’s advice for websites affected by Core Updates is consistent: focus on producing high-quality content that offers genuine value to users.

The reality on the street, so to speak, is that it’s not uncommon for numerous client websites to drop in rankings after any of these “core” updates, and even small updates shouldn’t be counted out. In either case, these drops are “signals” from the algorithm. They indicate either that areas of the entity’s web presence need improvement, or that the algorithm has taken a new direction and is rewarding something your website or entity presence lacks compared to the other choices (i.e., pages, GBP listings, images, etc.).

What’s challenging about all of this is that Google Search Console gives very little feedback when issues negatively affect the search rankings for a particular entity, website, or GBP listing. Oftentimes, we as agency owners need to put on the inspector cap and go looking for the cracks, the gaps, and the new “unhelpful” pages of content that Google hasn’t flagged for you, even though something is clearly wrong and we can see it in the rankings. So let’s go into some of the common things you should be looking for next.

Common Quality Concerns on Websites

During core updates, several prevalent issues might be highlighted/targeted:

- Intrusive ads or popups that hinder user experience

- Shallow or redundant content (duplicate content can be a form of this too)

- Numerous subpar local landing pages

- Content that doesn’t adhere to E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) standards

- Deceptive affiliate links

- Overly promotional “money pages”

- Content that is obviously written for SEO, as shown by the way it’s phrased

If we want to be instructed on what Google considers a “quality” page of content, the best instruction will come directly from the Quality Rater Guidelines.

8 Reasons Why Understanding the Google Quality Rater Guidelines (QRG) is Crucial for an Effective SEO Strategy:

- Insight into Google’s Perspective: The QRG provides a comprehensive view of what Google considers high-quality content. While the guidelines are used by human raters and not directly by the algorithm, they reflect the ideals Google aims to achieve with its algorithm updates.

- Emphasis on E-E-A-T: The guidelines place significant emphasis on Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T). By understanding these concepts, you can tailor your content to showcase expertise and authority in your field, which can enhance your site’s perceived quality. If you want a deep dive into E-E-A-T exclusively, here is a comprehensive guide to E-E-A-T I released on this topic a few months ago.

- User-Centric Focus: The QRG stresses the importance of meeting user needs. By aligning your content strategy with this principle, you ensure that your content genuinely serves your audience, which can lead to better user engagement and, consequently, better rankings.

- Guidance on YMYL Pages: The guidelines hold Your Money or Your Life (YMYL) pages to higher standards due to their potential impact on users’ well-being. If your site has YMYL content, understanding the QRG can help you ensure that such content meets Google’s stringent criteria.

- Avoiding Low-Quality Indicators: The QRG outlines characteristics of low-quality pages, such as misleading page design, lack of E-E-A-T, and unhelpful main content. By understanding these pitfalls, you can avoid them in your content strategy.

- Holistic Approach: The guidelines encourage a comprehensive view of quality, considering not just the content but also the user experience, site design, and functionality. This holistic approach can guide you to optimize all aspects of your site, not just the content.

- Adapting to Changes: SEO is a dynamic field, with search algorithms constantly evolving. The QRG can serve as a foundational document to help you adapt to these changes, ensuring your strategy remains aligned with Google’s objectives.

- Building Trust: The guidelines emphasize the importance of transparency and trustworthiness. By understanding and implementing these principles, you can build trust with your audience, which can lead to better brand loyalty and user engagement.

4 Ways this Knowledge Can Assist Agency Owners in Developing a Better SEO Content Strategy:

- Content Creation: By understanding what Google considers high-quality content, you can tailor your content creation process to meet these standards, ensuring your content is informative, accurate, and valuable to users.

- Content Auditing: Regularly review your content against the QRG to ensure it remains compliant with Google’s quality standards, updating or removing content that doesn’t meet these criteria.

- Expertise Showcasing: Ensure that content creators or contributors are recognized experts in their fields, and highlight their credentials and experience where relevant. Leverage Digital PR especially in this endeavor. If you can afford traditional PR for earned media, that’s another very useful layer. I would be remiss if I didn’t recommend Signal Genesys here for this component as it’s very effective at helping to build authority and credibility for an author, or a company, or both.

- Future-Proofing: By aligning your content strategy with the QRG, you can better anticipate future algorithm changes, ensuring your strategy remains effective in the long term.

In short, understanding the Google Quality Rater Guidelines provides a roadmap to creating content that not only ranks well but also genuinely serves users, ensuring long-term success in your SEO endeavors. Hopefully, I have made a strong enough case here to inspire you and any SEO team members to actually READ through the entire QRG document. Here’s the link to the full PDF! Happy reading!

For purposes of this article, here are some key concepts that you should understand from the Google Quality Rater Guidelines:

- E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness): This is a crucial metric in the guidelines. Websites and content creators are evaluated based on their demonstrated experience and expertise in a particular subject, the authoritativeness of the content and the site, and the overall trustworthiness in terms of accuracy and credibility.

- YMYL (Your Money or Your Life): Pages that could potentially impact a person’s health, happiness, safety, or financial stability are considered YMYL. These pages are held to higher standards because of their potential impact on users’ lives.

- Beneficial Purpose: Quality raters are instructed to evaluate whether a page has a beneficial purpose, meaning it should provide value to users and not be created solely for ranking well in search without providing genuine, useful content.

- Page Quality Rating: Raters are asked to rate the quality of pages from “Lowest” to “Highest” based on various factors, including E-E-A-T, the main content’s quality and amount, website information and reputation, and more.

- Who is responsible for the main content: This is a foundational focus for the rater. They want to identify the individual responsible for the content on the page. “Who is the author?” is essentially the first question. The next is: what are the reputation, experience, and expertise of that author?

- Needs Met Rating: This evaluates how well a result meets the user’s query intent on mobile searches. Results can range from “Fully Meets” (fully satisfies) to “Fails to Meet” (doesn’t satisfy the user’s query at all).

- Comparative Queries: Raters are sometimes asked to compare different results to determine which one better meets the user’s intent.

- Flagging Inappropriate Content: Raters are also trained to identify and flag content that might be offensive, upsetting, or inaccurate.

It’s important to note that while these guidelines provide insights into what Google considers high-quality content, they don’t directly dictate search rankings. Instead, they help Google’s engineers understand potential flaws in the algorithm and make necessary adjustments. For me, they are highly instructive and they provide a window of insight into what Googlebot and the algorithm systems are doing in automated ways without humans intervening.

Google’s perception of “quality, helpful content” is multifaceted. While the exact algorithms are proprietary and continuously evolving, the Google Quality Rater Guidelines provide a comprehensive overview of what Google considers high-quality content.

Here are the hallmark components of quality, helpful content:

- Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T):

- Experience: Has the content been produced based on actual use of a product? Does it reflect firsthand knowledge, like having visited a place or conveying personal experiences?

- Expertise: Content should be created by individuals knowledgeable in the topic. For some topics, formal expertise might be necessary, while for others, personal experience or passion can suffice.

- Authoritativeness: The content and the website hosting it should be recognized as an authority on the subject. This can be gauged by mentions, references, or citations from other authoritative sources.

- Trustworthiness: The content and the site should be deemed trustworthy, meaning the information is accurate, the site is secure, and there’s transparency about who’s behind the website.

- High-Quality Main Content (MC): The primary content should be well-researched, detailed, and provide valuable information that fulfills the user’s search intent. It should be free from factual errors and be presented in a clear, comprehensible manner.

- Positive Website Reputation: A website’s reputation, based on reviews, references, or mentions from authoritative sources, can influence the perceived quality of its content.

- Beneficial Purpose: The content should serve a beneficial purpose, whether it’s to inform, entertain, express an opinion, sell products, or offer a service. It shouldn’t be created with the sole intent of gaming search rankings.

- Comprehensive and Thorough: Quality content often covers its topic in depth, addressing multiple facets or viewpoints and answering a wide range of user queries related to the topic.

- Presentation and Production: The content should be well-presented and free from distracting ads or design elements. It should be easy to read, with a logical structure, and be free from excessive grammatical or spelling errors.

- Mobile Optimization: Given the prevalence of mobile searches, content should be easily accessible and readable on mobile devices.

- Supplementary Content: Features like navigation tools, related articles, or other resources that enhance user experience can contribute to the perceived quality of a page.

- Safety and Security: A quality website should not have malicious software, deceptive practices, or elements that compromise user safety or their security.

- Freshness: Depending on the topic, updated content can be crucial. For instance, information about medical treatments, laws, or current events should be current and reflect the latest knowledge or developments.

- User Engagement: While not directly mentioned in the guidelines, it’s inferred that content which keeps users engaged, leading to longer page visits, lower bounce rates, and more interactions, is likely considered of higher quality.

- Schema: Proper schema implementation is “helpful” to Googlebot exclusively, not to humans. Using schema is incredibly instructive to Googlebot so use it but use it right. Too many people don’t implement schema properly. That’s an entirely different article.
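As a rough illustration of the schema point above, the sketch below builds Article markup with an explicit author in JSON-LD. The types and properties used (`Article`, `Person`, `headline`, `author`, `jobTitle`, `sameAs`) come from the schema.org vocabulary; the helper name, the author name, and the URLs are placeholders assumed for the example, not anything prescribed by Google or by this article.

```python
import json


def build_article_jsonld(headline, author_name, author_url, job_title, same_as):
    """Return schema.org Article markup (JSON-LD) that names a real author.

    All values are supplied by the caller; nothing here is fetched or verified.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "url": author_url,
            "jobTitle": job_title,
            "sameAs": list(same_as),
        },
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = build_article_jsonld(
    headline="Understanding Google Core Updates",
    author_name="Jane Example",
    author_url="https://example.com/authors/jane-example",
    job_title="SEO Strategist",
    same_as=["https://www.linkedin.com/in/jane-example"],
)

# JSON-LD is normally embedded in the page inside a script tag like this.
print('<script type="application/ld+json">')
print(markup)
print("</script>")
```

Generating the markup from one function keeps the author block identical on every page, which supports the consistency point made elsewhere in this article. Validate the real output with a structured data testing tool before shipping it.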

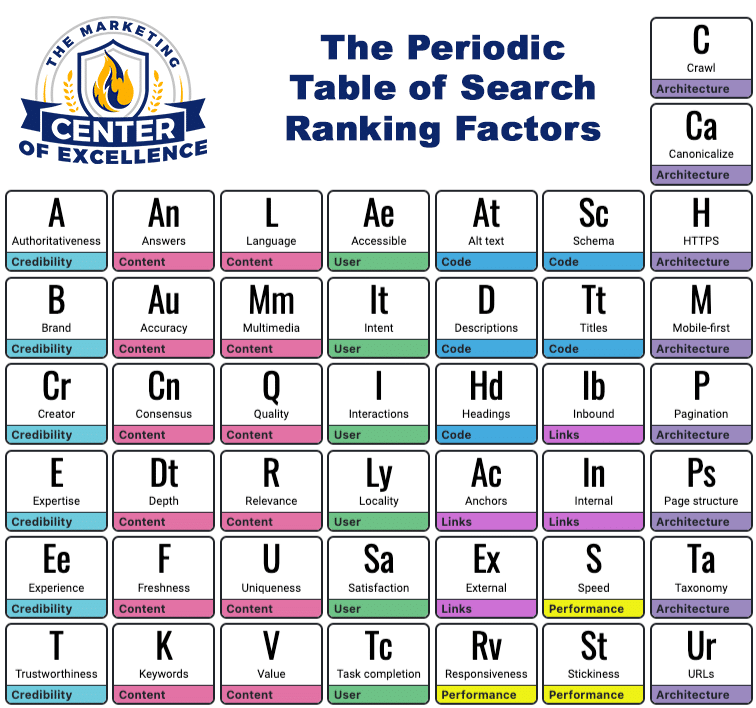

It’s essential to understand that while these components provide a framework for what Google considers quality content, the search algorithm considers hundreds of factors or “signals”, and the landscape is always evolving. Content creators should focus on genuinely serving their audience’s needs and staying updated with best SEO and content creation practices.

SEOs should focus on generating as many “signals” as possible using this “people-first,” helpful content strategy.

Interpreting Changes in User Intent

One of the main reasons for ranking fluctuations during core updates is Google’s ongoing refinement in discerning user intent. For instance, the ranking for a term like “Yellowstone” might shift from general information about the park to reviews about a new Yellowstone TV series. Collaborating with SEO professionals can help businesses pinpoint these shifts and adjust their content strategy accordingly. User intent will very much impact SERPs, so understanding trends in your niche or your client’s industry is important.

So we have mentioned E-E-A-T quite a bit already and it’s now more important than ever. I have been teaching agencies about EAT since 2019 and we have addressed many different components of E-E-A-T in our Signal Genesys software technology. E-E-A-T is critical to SEO success in 2023 and beyond. You must understand it and you must address it practically in your SEO strategy.

Decoding Google’s Approach to Recognizing and Assessing Authors via E-E-A-T

Google’s quest for quality content has led to a deeper focus on authors’ Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T). Here we will dive deeper into how Google might assess content based on these parameters.

E-E-A-T: Google’s Pursuit of Quality

While on-page factors, link signals, and entity-level signals are essential, E-E-A-T stands apart. It doesn’t focus on evaluating individual pieces of content but on the thematic relevance of the domain and the content’s creator. E-E-A-T operates beyond specific search queries, emphasizing themes and assessing content collections in relation to entities like companies, organizations, and individuals.

If you’re interested in a video replay of a Masterclass I hosted on E-E-A-T and creating helpful content, here’s the YouTube video of the class:

The Role of Authorship in Getting Content Crawled, Indexed and Ranked

Google has long recognized the significance of content sources in search rankings. Past initiatives like the Vince update in 2009 favored brand-generated content. Over the years, Google has explored various ways to gather signals for author ratings, including projects like Knol and Google+.

Given the rise of AI-generated content and spam, Google aims to filter out low-quality content from its search index. E-E-A-T helps Google rank content based on the entity, domain, and author without having to crawl every piece.

This is the one area where I think you should pause and really pay attention… it’s paramount for success in SEO going forward. Google MUST have a way to manage crawl resources. They simply cannot crawl the entire web every month, or potentially at all, ever. It’s growing too much, too fast, thanks to Artificial Intelligence, or “AI” for short. Google must account for the mass proliferation of web content (i.e., pages), and they will simply choose to ignore entire sites unless or until that entity and its website start associating their content with real people at the business. Not implementing authorship in your SEO strategy introduces a much higher risk that the content you are producing for the client will have zero effect on rankings because Googlebot is ignoring it completely (i.e., not crawling, rendering, or indexing it). Even if you clear those first steps, will the algorithm actually rank the page and push it into the primary search index? And even if that happens, where will that page end up in the SERPs? Meaning, will the algorithm consider it helpful? Thorough? Demonstrating E-E-A-T and adding value to the first page that someone else isn’t already providing? Back all of this up and, in my opinion, you need authorship today to set all of this in motion. Here is a great primer by Bill Slawski on Google patents around the topic of indexing, which continues to evolve like most other parts of the algorithm.

Remember: the very first task of a quality rater is to find out WHO is responsible for the page’s main content. Then they dive into E-E-A-T to evaluate further, but you can’t evaluate the reputation of a ghost or an otherwise anonymous person/author.

Identifying Authors and Assigning Content

Google classifies authors as person entities. These can be known entities in the Knowledge Graph or new entities in repositories like the Knowledge Vault. Google uses named entity recognition (NER), a subset of natural language processing, to identify and extract entities from unstructured content. Modern systems use word embedding techniques like Word2Vec for this purpose.

Vector Representations and Author Attribution

Content can be represented as vectors, enabling comparisons between content vectors and author vectors in vector spaces. The proximity between document vectors and the corresponding author vector indicates the likelihood of the author creating the documents.
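To make the idea of comparing document vectors with author vectors more concrete, here is a minimal sketch. It uses plain word-count vectors and cosine similarity from the standard library as a stand-in for learned embeddings, so it is not Google’s actual method. The function names, author labels, and sample texts are all invented for illustration.

```python
import math
from collections import Counter


def to_vector(text):
    """Turn text into a bag-of-words count vector (a stand-in for a learned embedding)."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_likely_author(document, author_samples):
    """Return the author whose known writing is closest to the document, plus all scores."""
    doc_vec = to_vector(document)
    scores = {
        name: cosine_similarity(doc_vec, to_vector(sample))
        for name, sample in author_samples.items()
    }
    return max(scores, key=scores.get), scores


# Invented sample data: known writing per (hypothetical) author.
author_samples = {
    "author_a": "core updates change search rankings and content quality signals",
    "author_b": "sourdough bread needs flour water salt and a long slow rise",
}
best, scores = most_likely_author(
    "how core updates affect search rankings", author_samples
)
print(best, scores)
```

The practical takeaway for agencies is that consistent topics and vocabulary under one byline make that byline easier to associate with the content, whatever the real vector model looks like.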

Key Information Sources for Authors

Google relies on various sources to gather information about authors, including:

- Wikipedia articles

- Author profiles

- Speaker profiles

- Social media profiles

Author boxes and consistent author descriptions help Google assign content to authors and verify the authenticity of the [person] entity (i.e., the author).

Decoding Google’s E-E-A-T Metrics: A Deeper Dive into Relevant Patents and Author Evaluations/Ratings

Google’s patents offer intriguing insights into the potential mechanisms behind the identification, content assignment, evaluation, and rating of authors based on E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). These are highly instructive to us as SEO professionals when crafting our SEO strategy because we should be very intentional about how we communicate WHO is responsible for any and all content we publish on the web going forward. Period. Whether for your own agency or on behalf of clients.

Here is a quick overview of some of these relevant patents that Google holds:

Authorship Badges: A Digital Signature

This patent outlines a system where content is linked to authors through a distinctive badge. This linkage is established using identifiers like email addresses or names, with verification facilitated by a browser extension.

Vector-Based Author Representation

In 2016, Google patented a technique, valid until 2036, that encapsulates authors as vectors using training datasets. These vectors, characterized by an author’s unique writing style and word choice, enable the assignment of previously unattributed content. This method also facilitates the clustering of authors with similar styles. Interestingly, this patent has only been applied for in the USA, hinting at its limited global application.

Author Reputation Metrics

A 2008 patent, revived in 2017 under the title “Monetization of online content,” delves into the calculation of an author’s reputation score. Factors influencing this score include:

– The author’s prominence.

– Publications in esteemed media outlets.

– Total number of publications.

– Recency of publications.

– Duration of the author’s career.

– Links generated from the author’s content.
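The factors listed above could be combined in many ways, and this article does not give a formula. Purely as a thought exercise, the sketch below uses a weighted average. The field names, the assumption that each signal is already normalized to a 0.0 to 1.0 range, and every weight are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class AuthorSignals:
    """Hypothetical, pre-normalized signals, each in the range 0.0 to 1.0."""
    prominence: float
    esteemed_outlets: float
    publication_count: float
    recency: float
    career_length: float
    inbound_links: float


# Illustrative weights only; they sum to 1.0 and are not taken from the patent.
WEIGHTS = {
    "prominence": 0.25,
    "esteemed_outlets": 0.20,
    "publication_count": 0.15,
    "recency": 0.15,
    "career_length": 0.10,
    "inbound_links": 0.15,
}


def reputation_score(signals):
    """Weighted average of the six factors, giving a score between 0.0 and 1.0."""
    return sum(weight * getattr(signals, name) for name, weight in WEIGHTS.items())


# Invented profiles: a well-established author versus a near-anonymous one.
established = AuthorSignals(0.8, 0.9, 0.7, 0.6, 0.9, 0.8)
anonymous = AuthorSignals(0.0, 0.0, 0.1, 0.5, 0.0, 0.1)
print(round(reputation_score(established), 3))
print(round(reputation_score(anonymous), 3))
```

Even with made-up weights, the exercise shows why an anonymous byline starts at a disadvantage: most of the factors simply have nothing to measure.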

Agent Rank: Beyond Just Content

A patent from 2005, with global registrations including Spain and Canada, describes the assignment of digital content to an agent, which could be a publisher or author. The ranking of this content is influenced by an ‘Agent Rank’, which is determined by the content and its associated backlinks.

Assessing Author Credibility

Another 2008 patent, solely registered in the USA, delves into the algorithmic determination of an author’s credibility. This patent highlights various factors, including:

– The author’s professional background and role within an organization.

– The relevance of the author’s profession to the content topics.

– The author’s educational qualifications and training.

– The duration and frequency of the author’s publications.

– The author’s mentions in awards or top lists.

Re-ranking Search Results: A New Perspective

A 2013 patent, registered both in the USA and globally, emphasizes the re-ranking of search results based on author scoring. This scoring considers citations and the proportion of content an author contributes to a specific topic.
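A simple way to picture re-ranking by author scoring is to blend each result’s relevance with a score for its author and sort again. The sketch below does exactly that. The blend factor, the 0.0 to 1.0 score ranges, and the sample data are assumptions made for illustration; the patent’s actual scoring is not described in enough detail here to reproduce.

```python
def rerank(results, author_scores, author_weight=0.3):
    """Re-order results by blending relevance with an author score.

    results: list of (url, author, relevance) tuples, relevance in 0.0-1.0.
    author_scores: dict mapping author name to a score in 0.0-1.0.
    author_weight: hypothetical blend factor; unknown authors score 0.0.
    """
    def blended(item):
        _url, author, relevance = item
        author_score = author_scores.get(author, 0.0)
        return (1 - author_weight) * relevance + author_weight * author_score

    return sorted(results, key=blended, reverse=True)


# Invented example data: an anonymous page that is slightly more relevant,
# and a page with a well-scored named author.
results = [
    ("https://example.com/anonymous-post", None, 0.80),
    ("https://example.com/expert-guide", "Jane Example", 0.75),
]
author_scores = {"Jane Example": 0.9}

for url, author, relevance in rerank(results, author_scores):
    print(url, author, relevance)
```

With these numbers the named author’s page moves ahead of the slightly more relevant anonymous page, which is the behavior the patent summary implies.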

Additional Considerations for Author Evaluations

Beyond the patents, several other factors can influence an author’s E-E-A-T rating:

– The overall quality of content on a specific topic.

– PageRank or link references to the author’s content.

– The author’s association with relevant topics or terms across various mediums.

– The frequency of the author’s name appearing in search queries related to specific topics.

The Future of E-E-A-T in Google Search

With advancements in AI and machine learning, Google can now identify and map semantic structures from vast amounts of unstructured content. This capability means that the origin of content, especially the authors and organizations behind it, will play an even more pivotal role in search rankings, right down to influencing crawl budget. The influence of E-E-A-T on search results is likely to continue to grow, potentially rivaling the importance of individual content relevance, especially given the proliferation of AI-generated content since the release of ChatGPT in late 2022.

Here’s a practical checklist of items that agencies should focus on implementing into their SEO strategy:

SEO Strategy Checklist: Mastering Google’s Core Updates & E-E-A-T

- Stay Updated on Core Updates

- Monitor Google’s announcements for major core updates.

- Analyze ranking fluctuations post-update to identify potential areas of improvement.

- Don’t overreact to fluctuations in the SERPs. Too many SEOs make this mistake. Follow best practices and stay away from the tactics you know Google is trying to combat, and you shouldn’t have to change much after any one update.

- Address Common Quality Concerns

- Eliminate intrusive ads or pop-ups.

- Remove or improve shallow or redundant content.

- Optimize local landing pages for quality and relevance.

- Ensure content adheres to E-E-A-T standards.

- Check for and rectify deceptive affiliate links.

- Avoid overly promotional “money pages”.

- Refrain from creating content that’s overtly SEO-focused without genuine value.

- Deep Dive into E-E-A-T for ALL content

- Ensure content reflects firsthand knowledge or experience.

- Highlight the expertise of content creators by building out robust author profiles.

- Build and showcase the site’s authoritativeness through mentions, references, or citations.

- Prioritize trustworthiness by ensuring content accuracy and site security.

- Build the authority of the author profile with digital PR and other high DA/PR links.

- Optimize the Website

- Ensure the site is mobile-responsive.

- Test site performance on various mobile devices.

- Technical and on-page SEO are crucial. Don’t ignore site issues.

- Build proper website silo categorization and architecture into your sitemap. Proper keyword research is the absolute prerequisite to successfully implementing this into the site.

- Regular Content Audits

- Periodically review content against the Quality Rater Guidelines.

- Update outdated information and improve or remove low-quality content.

- Engage in Digital PR

- Build the brand’s online presence through guest posts, interviews, and collaborations.

- Seek opportunities for earned media to enhance brand authority.

- Gather and Act on User Feedback

- Use surveys, feedback forms, and user testing to understand user needs.

- Implement changes based on feedback to enhance user experience.

- Emphasize Authorship

- Clearly identify content authors.

- Build and showcase the reputation, experience, and expertise of authors.

- Ensure consistent author descriptions across content.

- Stay Updated on Relevant Google Patents

- Familiarize yourself with patents related to authorship, content evaluation, and E-E-A-T.

- Adjust strategies based on insights from these patents.

- Prepare for AI’s Role in Content Creation

- Understand the implications of AI-generated content on SEO.

- Consider tools and strategies to differentiate genuine, high-quality content from AI-generated content.

- Prioritize User Intent

- Regularly review keyword strategies to ensure alignment with evolving user intent.

- Adjust content to better match the current needs and interests of the target audience.

- Build a Positive Entity and Website Reputation

- Encourage satisfied customers or clients to leave positive reviews.

- Address negative feedback constructively and make necessary improvements.

- Get reviews on major review sites, not just Google! This is a signal that Google is directly looking for so don’t just focus on getting reviews to the GBP listing.

- Stay Future-Proof

- Continuously educate yourself and your team on the latest SEO trends and best practices.

- Regularly revisit and adjust the SEO strategy to stay aligned with industry changes.

By following this checklist, I believe agencies can better navigate the complexities of Google’s core updates and the evolving importance of E-E-A-T, ensuring a robust and effective SEO strategy moving into the future.

I hope this article helps you better understand how to navigate the new world of SEO in 2023 and beyond. One thing is for sure, Google will continue to change and innovate so staying ahead of the curve in these critical areas of change will be a key to not only staying relevant but being a market leader and recognized expert in your agency niche. Feel free to use this article to educate your clients on the dynamics in play. Bringing some fresh strategies and tactics to the relationship after educating them on the changing landscape will instill confidence in you as the trusted marketing advisor.

Quick request! — if you found this article helpful and instructive, please share it! I would greatly appreciate it, especially if you have read this far all the way to the end, thank you.